Posted on August 16, 2024

Running OpenGenera on Linux (Revised)

This is a guide for readers with beginner to intermediate Linux knowledge who are comfortable with the command line, compiling software, and virtual machines.

The guide from 2018 (linked here) is a little out of date, so I’m going to walk you through a better way to run OpenGenera, even if you have never worked with the VLM before. I do, however, expect the reader to have a solid foundation in computers and virtual machines; other guides online will teach you what I gloss over here. I have no idea what the original author did to compile the binaries on his page, but do not use them unless you are experienced in package debugging: both of his compiled binaries failed to load on my test system with a Ryzen CPU, and troubleshooting that is beyond the scope of this write-up. I will also make the instructions a little clearer and explain what each one does.

That being said, you should be able to compile the VLM on any modern x86-64 system. As long as you have a compatible GCC, your choice of Linux distro is up to you. I went with Ubuntu 18.04 for ease of use and compatibility with X.Org. Building a bash script that launches under Wayland is also outside the scope of this document.

After you have set up your virtual machine with the options you want, start with an open terminal window. If you are unable to set up a virtual machine, I would recommend reading your hypervisor’s documentation and learning that first. Next, you’re going to want a modern, working VLM to run your Genera world file. I used this one, which includes plenty of bug fixes (as of late 2024). The repository can either be cloned with git or downloaded normally, depending on your workflow. The compiling instructions are in the INSTALL file in the repository, but as usual you’re going to want to install gcc and make before you build. The build also requires a few more dependencies; these can be installed before building or, as I did, after a failed build.

sudo apt install gcc make (gcc is the compiler and make is the tool to build executables)

sudo apt install sbcl (SBCL is Steel Bank Common Lisp, a Common Lisp implementation; Genera itself is written in Lisp)

sudo apt install libx11-dev (development files for Xlib, the X Window System protocol client library)

sudo apt-get install xcb libxcb-xkb-dev x11-xkb-utils libx11-xcb-dev libxkbcommon-x11-dev (XCB is a library implementing the client side of the X11 display server protocol, libxkbcommon is a keyboard handling library, and the others are supporting libraries and tools for each)
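If you would rather find out what is missing before a build fails, here is a small bash sketch that asks dpkg which of the packages above are not installed yet. The function name missing_packages is my own, not something from the linux-vlm repo, and it assumes a Debian-based distro like Ubuntu where dpkg-query is available.

```bash
# Print each named package that dpkg does not report as fully installed.
# Hypothetical helper; assumes a Debian/Ubuntu system with dpkg-query.
missing_packages() {
  local pkg
  for pkg in "$@"; do
    # For an installed package, dpkg-query's ${Status} field reads "install ok installed".
    if ! dpkg-query -W -f='${Status}' "$pkg" 2>/dev/null | grep -q 'install ok installed'; then
      printf '%s\n' "$pkg"
    fi
  done
}
```

For example, `sudo apt install $(missing_packages gcc make sbcl libx11-dev)` installs only what is absent. Unknown package names are reported as missing, so a typo in a name shows up right away.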

Some of these packages might be extraneous, but with dependencies I find it’s better to be safe than sorry. Let the installs finish, then run the build commands from the linux-vlm repo. The INSTALL file has plenty of troubleshooting advice for building the executables. Unless you specify otherwise, the genera executable will end up in /usr/local/bin. You are welcome to move it out of this folder, but I chose not to: this virtual machine was built only for emulating Genera, so the only clutter in there will be Genera’s own files. If you choose to make a directory in $HOME instead, make sure to move the genera executable into that folder.

Now we can shift gears back to the original post and collect the world and debugger files available online. Should these ever be removed, I will update this post with the current source of the files. Run the following commands in the directory that contains the genera executable:

curl -L -O https://archives.loomcom.com/genera/worlds/Genera-8-5-xlib-patched.vlod

This command downloads the “world” file, the Lisp machine saved to disk. Think of it as a VirtualBox disk image with a preinstalled OS.

curl -L -O https://archives.loomcom.com/genera/worlds/VLM_debugger

This is your VLM Debugger.

curl -L -O https://archives.loomcom.com/genera/worlds/dot.VLM

This is your config file for the VLM. I would recommend editing it before you move it, since the move renames it to .VLM, which makes it a hidden file.
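Before going further, it is worth confirming that all three files actually arrived. This is a minimal bash sketch: check_downloads is a hypothetical helper name, and it only checks that each file exists and is non-empty, not that its contents are valid.

```bash
# Report any of the three downloaded VLM files that is missing or empty.
# Hypothetical helper: checks existence and size only, not file contents.
check_downloads() {
  local dir="${1:-.}" f status=0
  for f in Genera-8-5-xlib-patched.vlod VLM_debugger dot.VLM; do
    if [ ! -s "$dir/$f" ]; then
      printf 'missing or empty: %s\n' "$f"
      status=1
    fi
  done
  return "$status"
}
```

Run `check_downloads` in the download directory; it prints nothing and returns 0 when all three files are present. Note that plain `curl -L -O` will save an HTTP error page as if it were the file; adding curl’s `-f` (`--fail`) flag makes it exit with an error instead.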

Next, either open a separate terminal window or change to a different directory in the open one. Run this command to switch to a directory inside /var, which holds variable files: files that change while the operating system is running.

cd /var/lib

Once in this directory, download the var_lib_symbolics tarball:

sudo curl -L -O https://archives.loomcom.com/genera/var_lib_symbolics.tar.gz

and untar it

sudo tar xvf var_lib_symbolics.tar.gz

Once that is done, you need to change ownership of the extracted files to the user that will be running the VLM. I am assuming that this user has UID 1000 and GID 1000, but please double-check your values by simply typing id in the terminal.

sudo chown -R 1000:1000 symbolics
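Rather than hard-coding 1000:1000, you can let id fill in the numbers. This small bash helper (owner_of is a hypothetical name, not from the original guide) prints the UID:GID pair for a user in the form chown expects.

```bash
# Print "UID:GID" for a user (default: the user running this shell),
# in the format chown expects. Hypothetical helper for illustration.
owner_of() {
  local user="${1:-$(id -un)}"
  printf '%s:%s\n' "$(id -u "$user")" "$(id -g "$user")"
}
```

From /var/lib, as your normal user, run `sudo chown -R "$(owner_of)" symbolics`. The `$(owner_of)` part expands in your own shell before sudo starts, so it picks up your IDs rather than root’s.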

Heading back to the original directory, you need to edit the dot.VLM file. I personally use nano, but vi is acceptable too.

sudo nano dot.VLM

Once in the editor, change the line

genera.worldSearchPath: /home/seth/genera

to

genera.worldSearchPath: /path/to/directory

In my case, the directory was /usr/local/bin, as that’s where the VLM executable was installed and where I downloaded all the files with curl originally. Unless you want Genera to use different *internal* IP addresses, you can leave all the other options alone. Save and close the file, then rename it so it becomes a hidden file:

mv dot.VLM .VLM
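If you would rather not open an editor, the same worldSearchPath change can be made with GNU sed. A minimal sketch, assuming the line begins with genera.worldSearchPath: exactly as shown above and that your directory path contains no | or & characters (both are special in this sed expression); set_world_search_path is a hypothetical helper name.

```bash
# Replace the genera.worldSearchPath value in a VLM config file, in place.
# Assumes GNU sed (-i) and a directory path without "|" or "&".
set_world_search_path() {
  local conf="$1" dir="$2"
  sed -i "s|^genera\.worldSearchPath:.*|genera.worldSearchPath: ${dir}|" "$conf"
}
```

After the rename, run it as `set_world_search_path .VLM /usr/local/bin`. If the file is owned by root, run the equivalent command directly instead: `sudo sed -i 's|^genera\.worldSearchPath:.*|genera.worldSearchPath: /usr/local/bin|' .VLM`.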

The next steps manually set up the hosts file, the time and daytime services, NFS, and the tap0 interface. Genera expects these old UNIX network services, which modern Linux no longer needs or enables by default. You can set them up in any order, as long as you complete every one.

The Hosts File

You need to set up the hosts file to match the internal network that the VLM expects. These addresses can be changed, but for the sake of simplicity I am going to leave them exactly as they are in the dot.VLM config.

sudo nano /etc/hosts

In this file, add two entries exactly as follows:

192.168.2.1 genera-vlm

192.168.2.2 genera

Save the file and exit.
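If you script your setup, appending these lines on every run will leave duplicates. Here is a small bash sketch (missing_host_lines is a hypothetical name) that prints only the entries not already present, so its output can be piped into sudo tee -a. It assumes the entries are written with a single space, exactly as above; a line that uses tabs will not be recognized and will be printed again.

```bash
# Print each Genera hosts entry that is not already a line in the given file
# (default /etc/hosts). Matching is exact, literal, and whole-line.
missing_host_lines() {
  local file="${1:-/etc/hosts}" line
  for line in "192.168.2.1 genera-vlm" "192.168.2.2 genera"; do
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line"
  done
}
```

Usage: `missing_host_lines | sudo tee -a /etc/hosts`. Running it a second time adds nothing.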

Time and Daytime Services

Genera uses the old UNIX time (port 37) and daytime (port 13) services to set its clock, so they must be installed and configured.

sudo apt install inetutils-inetd

Now you’ll need to edit the configuration file for use.

sudo nano /etc/inetd.conf

Replace the file’s contents with the following lines, or simply add them if everything in the file is already commented out:

time stream tcp nowait root internal

time dgram udp wait root internal

daytime stream tcp nowait root internal

daytime dgram udp wait root internal

Then restart the service:

sudo systemctl restart inetutils-inetd.service

You can check whether this worked by using telnet to connect to localhost on port 37 or 13. It is normal for the service to close the connection right after it answers.
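Over telnet, port 37’s answer looks like a few bytes of garbage, because the time protocol (RFC 868) replies with a raw 32-bit, big-endian count of seconds since 1900-01-01 UTC. For a readable check, here is a bash sketch; it relies on bash’s /dev/tcp redirection plus coreutils’ od and timeout, and both function names are my own.

```bash
# RFC 868 counts seconds from 1900-01-01 UTC; Unix time counts from 1970-01-01.
# The gap is 70 years including 17 leap days: (70*365 + 17) * 86400 = 2208988800.
rfc868_to_unix() {
  echo $(( $1 - 2208988800 ))
}

# Read the 4-byte reply from a TCP time service and print it as Unix time.
# Uses bash's /dev/tcp pseudo-path, so this must run under bash.
query_time_service() {
  local host="${1:-localhost}" bytes
  bytes=$(timeout 5 od -An -tu1 -N4 < "/dev/tcp/$host/37") || return 1
  set -- $bytes
  [ "$#" -eq 4 ] || return 1
  # Reassemble the big-endian 32-bit value from its four bytes.
  rfc868_to_unix $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}
```

Run `query_time_service; date +%s` and the two numbers should agree to within a second or so. The daytime service is already human-readable: `cat < /dev/tcp/localhost/13` prints the current date as text.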

NFS Services

Genera needs to access files on the local host, and NFS is the simplest service to use for that. You can install NFS with the following command:

sudo apt-get install nfs-common nfs-kernel-server

You will also need to add an entry to the NFS exports file, /etc/exports. This guide assumes you are using Ubuntu with the UID 1000 and GID 1000 user from earlier, so you can use the following line without editing. Adjust anonuid and anongid if you are on a different user account or distro.

/ genera(rw,sync,no_subtree_check,all_squash,anonuid=1000,anongid=1000)

Make sure the entry above sits on a single line in the file, with a space between / and genera. Then restart the service:

sudo systemctl restart nfs-kernel-server

To check that the filesystem has been exported correctly, run the following command; otherwise, move on.

sudo exportfs -rav
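If your account’s UID and GID are not 1000, a tiny bash helper can build the exports entry from your real IDs. This is a sketch: genera_export_line is a hypothetical name, and the export options are copied unchanged from the line above.

```bash
# Build the /etc/exports entry that shares / with the host named "genera",
# mapping every client user to the given UID/GID (default: current user's).
genera_export_line() {
  local uid="${1:-$(id -u)}" gid="${2:-$(id -g)}"
  printf '/ genera(rw,sync,no_subtree_check,all_squash,anonuid=%s,anongid=%s)\n' "$uid" "$gid"
}
```

Usage: `genera_export_line | sudo tee -a /etc/exports`, then `sudo exportfs -rav`. If you already added the line by hand, skip this, since it would append a duplicate entry.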

You also need to edit another NFS configuration file to enable NFS version 2, which is required on any release after Ubuntu 17.04. This guide assumes you are running 18.04, so this step is also required.

sudo nano /etc/default/nfs-kernel-server

You’re going to need to change these two lines

RPCNFSDCOUNT=8

RPCMOUNTDOPTS="--manage-gids"

to

RPCNFSDCOUNT="--nfs-version 2 8"

RPCMOUNTDOPTS="--nfs-version 2 --manage-gids"

Then restart the service.

sudo systemctl restart nfs-kernel-server
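The same two edits can be scripted with GNU sed if you prefer. A minimal sketch, assuming the file still contains the stock values shown above; enable_nfs_v2 is a hypothetical helper name, and lines that do not match exactly are left untouched.

```bash
# Rewrite the two stock settings in /etc/default/nfs-kernel-server so that
# rpc.nfsd and rpc.mountd also offer NFS version 2. Lines that no longer hold
# the stock values are left alone, so re-running is harmless.
enable_nfs_v2() {
  local conf="${1:-/etc/default/nfs-kernel-server}"
  sed -i \
    -e 's/^RPCNFSDCOUNT=8$/RPCNFSDCOUNT="--nfs-version 2 8"/' \
    -e 's/^RPCMOUNTDOPTS="--manage-gids"$/RPCMOUNTDOPTS="--nfs-version 2 --manage-gids"/' \
    "$conf"
}
```

Because the file is owned by root, pass the function to a root shell: `sudo bash -c "$(declare -f enable_nfs_v2); enable_nfs_v2"`, then restart nfs-kernel-server as above.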

Creating the tap0 interface

sudo apt install uml-utilities (this package provides tunctl, which the interface configuration below uses)

Edit the interfaces file:

sudo nano /etc/network/interfaces

Then add the following lines to /etc/network/interfaces:

auto tap0

iface tap0 inet static

address 192.168.2.1

netmask 255.255.255.0

pre-up /usr/bin/tunctl -t tap0

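If this method does not work for you (on Ubuntu 18.04, /etc/network/interfaces is only read when the ifupdown package is installed, because netplan is the default), you can do this manually after every reboot of the VM: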

sudo ip tuntap add dev tap0 mode tap

sudo ip addr add 192.168.2.1/24 dev tap0

sudo ip link set dev tap0 up
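Since those three commands have to be repeated after every reboot, it can help to wrap them in a function that is safe to run more than once. This is a bash sketch (setup_tap0 is a hypothetical name): it only creates tap0 if it does not exist yet, and only adds 192.168.2.1/24 if the interface does not already carry that address. It needs root, just like the commands above.

```bash
# Create tap0 if needed, give it 192.168.2.1/24, and bring it up.
# Each step is skipped when already done, so re-running is harmless. Run as root.
setup_tap0() {
  local dev="${1:-tap0}" addr="${2:-192.168.2.1/24}"
  # Create the TAP device only if it does not exist yet.
  if ! ip link show dev "$dev" >/dev/null 2>&1; then
    ip tuntap add dev "$dev" mode tap || return 1
  fi
  # Add the host-side address only if the device does not already have it.
  if ! ip -o -4 addr show dev "$dev" | grep -qF "inet $addr "; then
    ip addr add "$addr" dev "$dev" || return 1
  fi
  # Setting a link up is harmless if it is already up.
  ip link set dev "$dev" up
}
```

Usage: `sudo bash -c "$(declare -f setup_tap0); setup_tap0"`.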

If all instructions have been followed up to this point, you should have a working install of OpenGenera. You can start the executable by running

sudo ./genera

from that directory. If you do not run it with sudo, any settings you change in Genera will not be written to disk, so they will be lost when you restart the VLM.

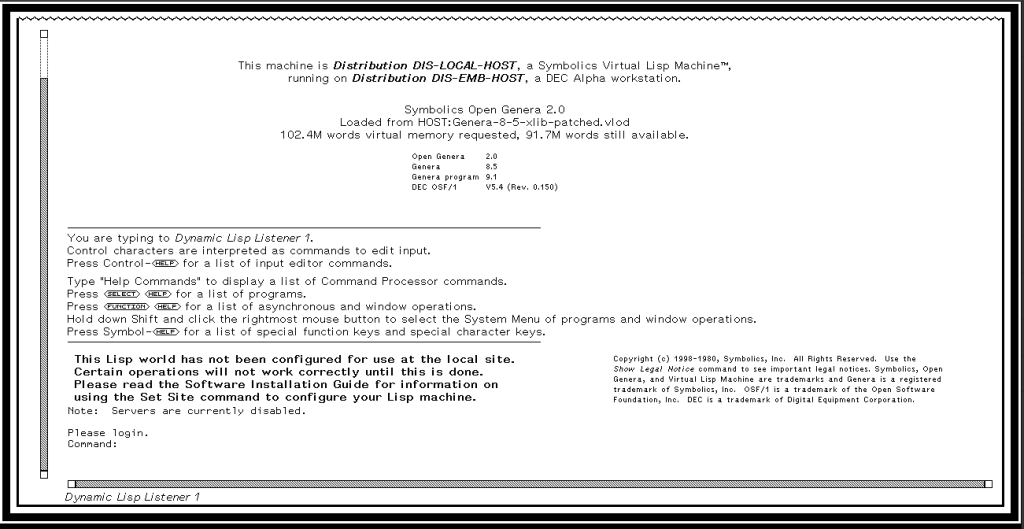

From here, you should have a login prompt for OpenGenera in a new window! Enjoy Lisp!

In the terminal you will type:

Posted on March 5, 2024

I’m Back

Just over 4 years away from the blogging world, and plenty has happened outside this little corner of the internet. A few major things of note: this website is on a new host, courtesy of a local friend, and, as you might have noticed on the home page, I am no longer in Sonoma County. This was a side effect of several personal events that happened in 2020, during the COVID pandemic that racked the globe. I have majorly upgraded both my workspace and my collection, to the point of bursting at the seams. I am in desperate need of a larger workspace at this point.

In terms of retro computing, oh boy, buckle up, because so much has happened in 4 years. The last time I posted, my collection was incredibly small: a few Silicon Graphics workstations and several Apple machines. Since then, it has ballooned into an amalgamation of machines spanning roughly 1987 to 2005; the only things not collected yet are minicomputers from the late 70s and early 80s, and incredibly rare or expensive systems. I have even owned an SGI Crimson in that time, a machine I thought I would never be able to call my own. It has since been handed off to a museum so they could give it more care than I ever could (despite them allegedly killing it a few months after putting it on display). I have also managed to secure multiple Amigas, a long time coming from the single 500 I owned in 2019. I am also now the owner of 3 network racks, something I said years ago I’d never do. What’s worse is that I’m in the market for a fourth. I have upgraded my repair skills from simple troubleshooting and software installations to being somewhat skilled in soldering, hardware repair, and advanced troubleshooting. I have yet to have any clients beyond my friends and family, but that might change in the coming years as I get much better at trusting my skill level. I have also become a regular exhibitor at Vintage Computer Festival West, having displayed in 2021, 2022, and 2023, and soon 2024. This collection is somewhat fluid, with plenty coming and going over the years as my interest in certain machines ebbs and flows. As of late (early 2024), the major common denominator between most of them has been a special interest in rackmount equipment and SBC-based machines.

Profession-wise? Yeah, I’m no longer a school bus driver, after 10 years of transportation-related jobs. I am now back in college to obtain a double major in Cybersecurity and Electronics. The eventual end goal is a master’s in Computer Science, but that’s many years down the line.

Now, back to this blog. I do plan to start documenting my projects so all of you can see the repairs and upgrades, both the good repairs and the cursed ones (as I’m a firm believer in “if it works, I’ll use it”). I will not give any kind of update schedule at this time, given my proven inability to stick to one; posts will come as I work through projects.

Posted on December 4, 2019

Antminer S3 ASIC Teardown #1

I obtained 2 Antminers this week from the local classifieds. They came without power supplies, and there was little to no information about how they work inside, so I had to research the chips themselves. I started tearing one down to see what makes these machines tick. The most I could get from Google was this little snippet: Model Antminer S3 from Bitmain, mining the SHA-256 algorithm with a maximum hashrate of 478 GH/s at a power consumption of 366 W. That means it’s going through 478 billion hashes every second while drawing 366 W, about the same power usage as a business-class computer. They are also at least 3 years old at this point, so they are no longer profitable for Bitcoin mining as of 2019.

After a little more searching, I found that the 2 boards in the system use the BM1382 chip. This is a Bitmain-produced Bitcoin mining ASIC with a core frequency of 250 MHz. Take that clock speed with a grain of salt when comparing it to a general-purpose CPU: this chip is built only for mining Bitcoin, and it is extremely efficient at that one job. Each system board is marked S3 Hash Board V1.3 and holds 16 of the ASIC chips. Each board also takes power directly through two 6-pin sockets, so powering the unit requires four 6-pin PSU connectors.
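Putting the numbers above together gives a rough sense of scale. This is back-of-the-envelope arithmetic, assuming the quoted 478 GH/s and 366 W apply to the whole unit and that its 2 hash boards carry 16 chips each. The wall power also feeds the controller board and fans, so the per-chip power figure is an upper bound.

$$
\frac{366\ \text{W}}{478\ \text{GH/s}} \approx 0.77\ \text{J/GH},
\qquad
\frac{478\ \text{GH/s}}{2 \times 16\ \text{chips}} \approx 14.9\ \text{GH/s per chip},
\qquad
\frac{366\ \text{W}}{32\ \text{chips}} \approx 11.4\ \text{W per chip}.
$$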

Each board connects to a controller board with a 20-pin cable. The controller board has an RJ45 jack for connecting the device to the internet, and for configuring it through a self-hosted web page, like a firewall or router. The controller board also has a PIC32MX, a MIPS-based microcontroller that controls the ASICs, and a ThinkPHY2, another MIPS-based processor, but one geared toward networking. The ThinkPHY2 includes a MIPS 24K processor, a five-port IEEE 802.3 Fast Ethernet switch with MAC/PHY, one USB 2.0 MAC/PHY, and an external memory interface for serial flash. There is also a reset button on the board, plus two unpopulated spots, one for LAN and one for debug. (I might have to get a UART debug device and solder it to the circuit board.)